The so-called master-transaction update is one of the, if not the defining algorithms of the discipline formerly known as "data processing". Given two sorted files of records with increasing keys, the process applies each record in the transaction file to each record of the the master file and outputs the result, if any, to the updated master file in one pass over each input. The same algorithm can compute the union, intersection or difference of sorted sequences. For instance, the union of two sets represented as sorted lists of unique elements is:

let union =

let rec go l r = match l, r with

| [], xs | xs, [] -> xs

| x :: xs, y :: ys ->

match compare x y with

| -1 -> x :: go xs (y :: ys)

| 0 -> x :: go xs ys

| 1 -> y :: go (x :: xs) ys

| _ -> assert false

in go

Intersection is:

let inter =

let rec go l r = match l, r with

| [], _ | _, [] -> []

| x :: xs, y :: ys ->

match compare x y with

| -1 -> go xs (y :: ys)

| 0 -> x :: go xs ys

| 1 -> go (x :: xs) ys

| _ -> assert false

in go

while difference is:

let diff =

let rec go l r = match l, r with

| [], _ | _, [] -> l

| x :: xs, y :: ys ->

match compare x y with

| -1 -> x :: go xs (y :: ys)

| 0 -> go xs ys

| 1 -> go (x :: xs) ys

| _ -> assert false

in go

And so on. The three functions use the same underlying merge schemata; what varies is the operation to perform in each of the five possible cases:

- The left sequence is nil

- The right sequence is nil

- The next element in the left sequence is less than the next element in the right sequence

- The next element in the left sequence is equal to the next element in the right sequence

- The next element in the left sequence is greater than the next element in the right sequence

The question is, then, how many set operations can the merge algorithm implement? These five cases partition both input sequences in disjoint sets such that the output sequence is the natural merge of zero or more of them. For example, processing the sets { 1, 3, 4, 6, 7, 8 } ⋈ { 2, 3, 5, 6, 8, 9 } results in the following steps:

| LN | RN | LT | EQ | GT | Arguments |

|---|---|---|---|---|---|

| 1 | { 1, 3, 4, 6, 7, 8 } ⋈ { 2, 3, 5, 6, 8, 9, 10 } | ||||

| 2 | { 3, 4, 6, 7, 8 } ⋈ { 2, 3, 5, 6, 8, 9, 10 } | ||||

| 3 | { 3, 4, 6, 7, 8 } ⋈ { 3, 5, 6, 8, 9, 10 } | ||||

| 4 | { 4, 6, 7, 8 } ⋈ { 5, 6, 8, 9, 10 } | ||||

| 5 | { 6, 7, 8 } ⋈ { 5, 6, 8, 9, 10 } | ||||

| 6 | { 6, 7, 8 } ⋈ { 6, 8, 9, 10 } | ||||

| 7 | { 7, 8 } ⋈ { 8, 9, 10 } | ||||

| 8 | { 8 } ⋈ { 9, 10 } | ||||

| 9,10 | ∅ ⋈ { 9, 10 } |

Abstracting away the operations to perform in each of these five cases we have the following schema:

let merge ln rn lt eq gt : 'a list -> 'a list -> 'a list =

let rec go l r = match l, r with

| [], ys -> ln ys

| xs, [] -> rn xs

| x :: xs, y :: ys ->

match compare x y with

| -1 -> lt x (go xs (y :: ys))

| 0 -> eq x (go xs ys )

| 1 -> gt y (go (x :: xs) ys )

| _ -> assert false

in go

Both ln and rn must decide what to do with the remaining list and so have type α list → α list, while lt, eq and gt must decide what to do with the element in consideration and so have type α → α list → α list; thus the type of merge is (α list → α list) → (α list → α list) → (α → α list → α list) → (α → α list → α list) → (α → α list → α list) → α list → α list → α list. The operations on the remainder either pass it unchanged or return nil, while the operations on the next element either add it to the output sequence or not:

let self xs = xs and null _ = [] and cons x xs = x :: xs and tail _ xs = xs

(some of these have well-known names in functional programming, but here I choose to use these neat, four-letter ones.) With the proviso that the output sequence must be increasing these four functions exhaust the possibilities by parametricity; otherwise, duplications and rearrangements would satisfy the parametric signature. Now union, intersection and difference are simply:

let union l r = merge self self cons cons cons l r and inter l r = merge null null tail cons tail l r and diff l r = merge null self cons tail tail l r

It is obvious that the question I posed above is answered as 25 = 32 possible set operations obtainable by varying each of the five operations. The next question is, then, what are these 32 operations? Let me characterize each of the five sets ln, rn, lt, eq and gt. The easiest one is eq, as it obviously is the intersection of both sets:

eq(A, B) = A ∩ B

By substitution in merge it is possible to show that ln(A, B) = rn(B, A) and vice versa; hence just one set expression suffices. The merge ends with rn for every element in A that is greater than every element in B, as the latter were included in the comparison sets; and conversely for ln. Hence:

ln(A, B) = B / A ≡ ⟨S y : B : ⟨∀ x : A : y > x⟩⟩ rn(A, B) = A / B ≡ ⟨S x : A : ⟨∀ y : B : x > y⟩⟩

(read A / B as "A over B"). All three sets are pairwise disjoint, since A / B ⊆ A and A / B ∩ B = ∅, and conversely, by construction.

The two remaining sets are also symmetric in the sense that lt(A, B) = gt(B, A) but are more difficult to characterize. My first attempt was to think of each element in A as being processed in turn and put into lt(A, B) just when strictly less than all the elements in B against which it could be matched, namely lt(A, B) = ⟨S x : A : x < ⟨min y : B : x ≤ y⟩⟩. The condition can be simplified with a bit of equational reasoning:

x∈A ∧ x < ⟨min y : B : x ≤ y⟩

≡ { GLB }

x∈A ∧ ⟨∀ y : y∈B ∧ x ≤ y : x < y⟩⟩

≡ { Trading }

x∈A ∧ ⟨∀ y : y∈B : x > y ∨ x < y⟩⟩

≡ { Trichotomy }

x∈A ∧ ⟨∀ y : y∈B : x ≠ y⟩⟩

≡

x∈A ∧ x∉B

In other words, A − B. The problem is that, since the quantification over an empty set is trivially true, this set is too big as it includes the respective remainder; that is to say A / B ⊆ A − B as I showed above. To preserve disjointness I define:

lt(A, B) = A − B − A / B gt(A, B) = B − A − B / A

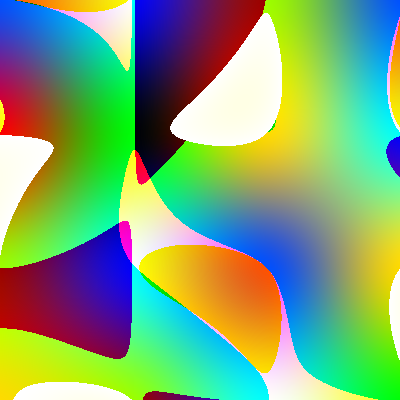

In a Venn diagram, these five sets are:

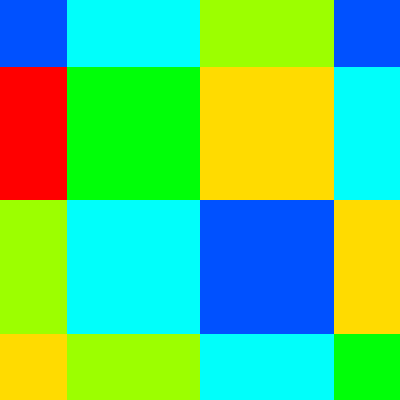

So by including or excluding one of the five components depending on the function passed to each of the five operations, the 32 set operations achievable by merge are:

Or in tabular form:

| N | ln | rn | lt | eq | gt | Function | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | self | self | cons | cons | cons | A∪B | ||||||||

| 1 | self | self | cons | cons | tail | A | ∪ | B/A | ||||||

| 2 | self | self | cons | tail | cons | A∆B | ||||||||

| 3 | self | self | cons | tail | tail | A−B | ∪ | B/A | ||||||

| 4 | self | self | tail | cons | cons | B | ∪ | A/B | ||||||

| 5 | self | self | tail | cons | tail | A∩B | ∪ | B/A | ∪ | A/B | ||||

| 6 | self | self | tail | tail | cons | B−A | ∪ | A/B | ||||||

| 7 | self | self | tail | tail | tail | B/A | ∪ | A/B | ||||||

| 8 | self | null | cons | cons | cons | A∪B | − | A/B | ||||||

| 9 | self | null | cons | cons | tail | A | ∪ | B/A | − | A/B | ||||

| 10 | self | null | cons | tail | cons | A∆B | − | A/B | ||||||

| 11 | self | null | cons | tail | tail | A−B | ∪ | B/A | − | A/B | ||||

| 12 | self | null | tail | cons | cons | B | ||||||||

| 13 | self | null | tail | cons | tail | A∩B | ∪ | B/A | ||||||

| 14 | self | null | tail | tail | cons | B−A | ||||||||

| 15 | self | null | tail | tail | tail | B/A | ||||||||

| 16 | null | self | cons | cons | cons | A∪B | − | B/A | ||||||

| 17 | null | self | cons | cons | tail | A | ||||||||

| 18 | null | self | cons | tail | cons | A∆B | − | B/A | ||||||

| 19 | null | self | cons | tail | tail | A−B | ||||||||

| 20 | null | self | tail | cons | cons | B | − | B/A | ∪ | A/B | ||||

| 21 | null | self | tail | cons | tail | A∩B | ∪ | A/B | ||||||

| 22 | null | self | tail | tail | cons | B−A | − | B/A | ∪ | A/B | ||||

| 23 | null | self | tail | tail | tail | A/B | ||||||||

| 24 | null | null | cons | cons | cons | A∪B | − | B/A | − | A/B | ||||

| 25 | null | null | cons | cons | tail | A | − | A/B | ||||||

| 26 | null | null | cons | tail | cons | A∆B | − | B/A | − | A/B | ||||

| 27 | null | null | cons | tail | tail | A−B | − | A/B | ||||||

| 28 | null | null | tail | cons | cons | B | − | B/A | ||||||

| 29 | null | null | tail | cons | tail | A∩B | ||||||||

| 30 | null | null | tail | tail | cons | B−A | − | B/A | ||||||

| 31 | null | null | tail | tail | tail | ∅ | ||||||||

Arguably, besides the traditional five set operations A ∪ B, A ∩ B, A − B, B − A and A ∆ B, only the remainders A / B, B / A and perhaps A / B ∪ B / A = A ⊔ B, the join of A and B (not to be confused with the relational operation), are independently useful. These three are obscure, and as far as I know have no name, although I'd love to hear if there is literature about them. This might mean that this exhaustive catalog of set merges is rather pointless, but at least now I know for sure.